Harnessing AI for Enhanced Mobile App Performance

Explore practical strategies, vivid stories, and repeatable patterns that make apps faster, smoother, and more energy‑aware. If performance thrills you as much as great design, jump in, comment along the way, and subscribe for future deep dives.

Why AI‑Driven Performance Matters Now

A small travel startup trained an on‑device next‑screen predictor using anonymous navigation sequences. Their median time‑to‑interaction dropped significantly, and users described the experience as telepathic rather than merely fast. Engagement streaks lengthened, and cancellations decreased, illustrating how performance improvements compound into real business outcomes.

Predictive Caching and Smart Prefetch

Sequence models can observe navigation patterns and predict the most likely next screens. By precomputing templates, prebinding view models, and warming caches for those screens, your app removes invisible friction. The key is a probability threshold: prefetch only when the predicted likelihood is high enough to justify the memory and bandwidth, and hold back during unpredictable user flows.
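Here is a minimal sketch of the threshold idea. It assumes a first-order Markov model stands in for a real sequence model; the screen names, the 0.6 threshold, and the names `NextScreenPredictor` and `screens_to_prefetch` are illustrative assumptions, not taken from any particular app or library.

```python
from collections import Counter, defaultdict


class NextScreenPredictor:
    """First-order Markov model over screen transitions (a simple stand-in for a sequence model)."""

    def __init__(self):
        # transitions[current][next] = number of times `next` followed `current`
        self.transitions = defaultdict(Counter)

    def observe(self, session):
        """Count every consecutive (current, next) pair in one navigation session."""
        for current, nxt in zip(session, session[1:]):
            self.transitions[current][nxt] += 1

    def predict(self, current):
        """Return {next_screen: probability} for the given screen, or {} if it is unseen."""
        counts = self.transitions.get(current)
        if not counts:
            return {}
        total = sum(counts.values())
        return {screen: n / total for screen, n in counts.items()}


def screens_to_prefetch(predictor, current, threshold=0.6, max_items=2):
    """Pick at most `max_items` next screens whose probability clears the threshold."""
    probs = predictor.predict(current)
    likely = [(s, p) for s, p in probs.items() if p >= threshold]
    likely.sort(key=lambda sp: sp[1], reverse=True)
    return [s for s, _ in likely[:max_items]]


# Hypothetical anonymous navigation sessions.
predictor = NextScreenPredictor()
for session in [
    ["home", "search", "results", "details"],
    ["home", "search", "results", "details"],
    ["home", "profile"],
    ["home", "search", "results", "filters"],
]:
    predictor.observe(session)
```

With these four sample sessions, `screens_to_prefetch(predictor, "home")` returns `["search"]`, because three of the four sessions moved from home to search. Raising the threshold makes the app prefetch less often, which is the lever for trading memory pressure against hit rate.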

Blend predictions with real‑time network signals. On Wi‑Fi, eagerly fetch images at higher resolution; on weak cellular, defer heavier assets while prefetching skeleton data. This adaptive strategy minimizes jank, trims spinners, and respects user data plans, proving performance and empathy can absolutely coexist within thoughtful mobile experiences.
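The blending described above might look roughly like this. It is a sketch under stated assumptions: the `NetworkState` fields, the 5 Mbps cutoff, and the plan keys are all hypothetical, and a real app would read these signals from its platform's connectivity APIs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NetworkState:
    kind: str             # "wifi" or "cellular" (hypothetical labels)
    downlink_mbps: float  # estimated bandwidth
    metered: bool         # True when the user pays per byte


def prefetch_plan(p_next, net, threshold=0.6):
    """Decide what to prefetch for the predicted next screen.

    p_next is the predicted probability that the user opens that screen.
    Returns a dict with a skeleton-data flag and an image quality (or None).
    """
    # Low-confidence prediction: prefetch nothing at all.
    if p_next < threshold:
        return {"skeleton": False, "images": None}
    # Unmetered Wi-Fi: fetch eagerly, including higher-resolution images.
    if net.kind == "wifi" and not net.metered:
        return {"skeleton": True, "images": "high"}
    # Decent unmetered link: skeleton data plus low-resolution images.
    if net.downlink_mbps >= 5.0 and not net.metered:
        return {"skeleton": True, "images": "low"}
    # Weak or metered link: skeleton data only, heavier assets deferred.
    return {"skeleton": True, "images": None}
```

Keeping the policy a pure function of the prediction and the network state makes it easy to unit test and to tune per market without touching the fetch code.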

Adaptive UI and Rendering with ML

A lightweight policy model can monitor frame timing, input bursts, and device thermals, then shorten animation durations or disable nonessential effects. By adjusting just before contention sets in, it protects the frame budget (roughly 16.7 ms per frame on a 60 Hz display). Users notice a consistently smooth feel, even when background work or multitasking would otherwise cause stutter.
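As a rough illustration, the sketch below uses a hand-written heuristic in place of a learned policy: an exponentially weighted moving average of frame times plus a thermal flag. The class name, the thermal labels, the 85% headroom, and the output fields are assumptions made for this example.

```python
class RenderPolicy:
    """Heuristic stand-in for a learned rendering policy."""

    def __init__(self, budget_ms=16.7, alpha=0.2, headroom=0.85):
        self.budget_ms = budget_ms  # frame budget at 60 Hz
        self.alpha = alpha          # EWMA smoothing factor
        self.headroom = headroom    # react at 85% of budget, before frames drop
        self.ewma_ms = None

    def update(self, frame_ms, thermal="nominal"):
        """Feed one frame time; return the rendering settings to apply next."""
        if self.ewma_ms is None:
            self.ewma_ms = frame_ms
        else:
            self.ewma_ms = self.alpha * frame_ms + (1 - self.alpha) * self.ewma_ms

        near_budget = self.ewma_ms >= self.headroom * self.budget_ms
        hot = thermal in ("serious", "critical")  # hypothetical thermal labels
        if near_budget or hot:
            # Degrade gracefully: shorter animations, no nonessential effects.
            return {"animation_scale": 0.5, "effects_enabled": False}
        return {"animation_scale": 1.0, "effects_enabled": True}
```

Acting at a fraction of the budget, rather than at the budget itself, is what lets the policy respond before frames are actually dropped. A trained model could replace the two `if` conditions while keeping the same inputs and outputs.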

Intelligent Resource Scheduling

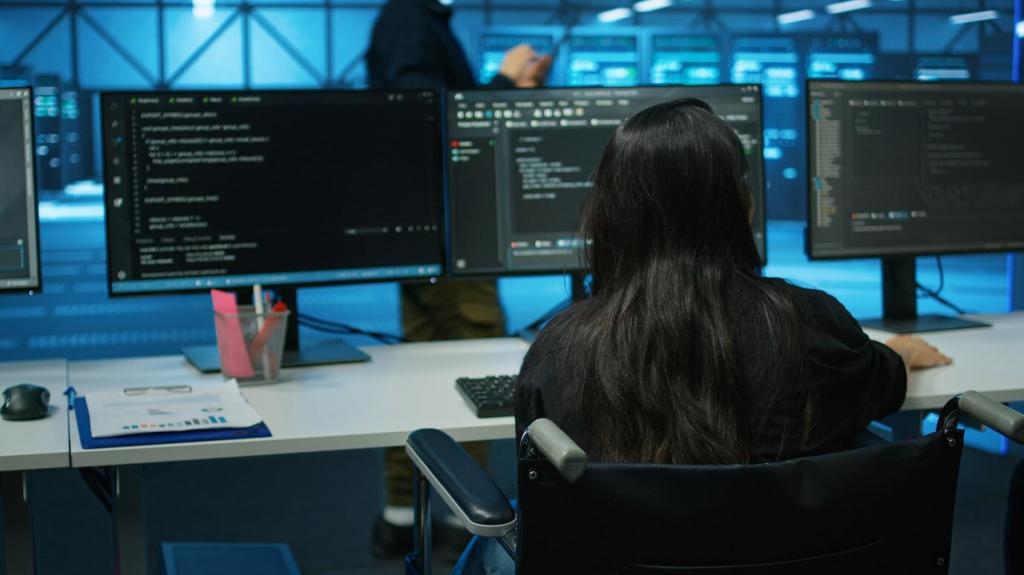

On‑Device Orchestrators

A bandit or reinforcement learning policy can route jobs across CPU, GPU, or neural accelerators, respecting heat and foreground priorities. By learning which combinations cause jank on specific devices, it schedules critical tasks at the right moments, reducing contention while keeping the interface fluid and reliably responsive to user input.
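One way to picture the bandit variant is an epsilon-greedy router, sketched below. The arm names, the reward definition (for example, negative dropped frames while the job ran), and the `allowed` filter for heat or foreground constraints are assumptions for illustration; a production policy would likely also condition on device model and job type.

```python
import random


class EpsilonGreedyRouter:
    """Epsilon-greedy bandit that picks a compute unit for each job."""

    def __init__(self, arms, epsilon=0.1, seed=None):
        self.arms = list(arms)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = {a: 0 for a in self.arms}
        self.mean_reward = {a: 0.0 for a in self.arms}

    def choose(self, allowed=None):
        """Pick an arm; `allowed` can exclude units that are hot or reserved."""
        candidates = [a for a in self.arms if allowed is None or a in allowed]
        if not candidates:
            raise ValueError("no compute unit is currently allowed")
        # Try every candidate once before trusting the averages.
        untried = [a for a in candidates if self.counts[a] == 0]
        if untried:
            return untried[0]
        # Explore with probability epsilon, otherwise exploit the best average.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(candidates)
        return max(candidates, key=lambda a: self.mean_reward[a])

    def record(self, arm, reward):
        """Update the running mean reward for an arm (higher is better)."""
        self.counts[arm] += 1
        n = self.counts[arm]
        self.mean_reward[arm] += (reward - self.mean_reward[arm]) / n
```

A typical loop would call `choose()`, run the job on the returned unit, measure jank during the run, and pass the negated jank count to `record()`. Passing `allowed={"cpu", "npu"}` while the GPU is busy rendering is one simple way to respect foreground priorities.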

Battery‑Savvy Decisions

Train a simple energy predictor from historical telemetry to estimate cost per task. Then batch nonurgent work when charging, or align with radio wake windows. Users feel the difference when your app stays lively all day, avoiding power spikes that quietly push uninstall decisions after a few frustrating charging cycles.
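A very small version of this idea is sketched below, assuming the "predictor" is nothing more than a per-task average over historical telemetry. The task names, the millijoule unit, and the 50 mJ budget are hypothetical values chosen for the example.

```python
from collections import defaultdict
from statistics import mean


def fit_energy_model(telemetry):
    """Estimate mean energy cost per task type.

    telemetry: iterable of (task_type, energy_mj) pairs from past runs.
    """
    samples = defaultdict(list)
    for task_type, energy_mj in telemetry:
        samples[task_type].append(energy_mj)
    return {task_type: mean(values) for task_type, values in samples.items()}


def should_run_now(task_type, urgent, charging, model,
                   budget_mj=50.0, default_mj=100.0):
    """Run immediately if urgent, charging, or predicted to be cheap; otherwise defer."""
    if urgent or charging:
        return True
    # Unknown task types fall back to a pessimistic default cost.
    return model.get(task_type, default_mj) <= budget_mj


# Hypothetical historical telemetry.
model = fit_energy_model([
    ("sync", 120.0), ("sync", 80.0),
    ("log_upload", 20.0), ("log_upload", 30.0),
])
```

Deferred tasks would sit in a queue that is flushed when the device starts charging or when the radio is already awake for other traffic. A regression model with features such as payload size could replace the per-task mean without changing `should_run_now`.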

Real Story: Checkout Surge

During a holiday rush, a retail app’s learned scheduler deferred thumbnail decoding and prioritized input handling around checkout. Instead of freezing, it cleverly smoothed taps, processed payments first, and backfilled visuals afterward. Support tickets dropped, and customers praised a calm checkout even while servers and devices ran near capacity.

Anomaly Detection and Self‑Healing Apps

Unsupervised models can flag anomalous crash clusters, latency spikes, or memory leaks before they spread. With device, OS, and network context, alerts become actionable rather than noisy. Engineers focus on patterns that matter, shaving days off triage and keeping potentially catastrophic regressions away from the majority of users.
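For a sense of how simple an unsupervised detector can be, here is a sketch that flags latency outliers with a robust z-score based on the median and the median absolute deviation (MAD). The 3.5 cutoff is a common rule of thumb, and the sample fields (`device`, `os`, `latency_ms`) are assumptions; real systems often use richer models over many signals.

```python
from statistics import median


def robust_z_scores(values):
    """Modified z-scores using median and MAD, which resist distortion by outliers."""
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        # All values (or most of them) are identical; nothing stands out.
        return [0.0 for _ in values]
    # 0.6745 rescales MAD to be comparable with a standard deviation.
    return [0.6745 * (v - med) / mad for v in values]


def flag_anomalies(samples, threshold=3.5):
    """Return the samples whose latency deviates strongly from the group."""
    scores = robust_z_scores([s["latency_ms"] for s in samples])
    return [s for s, z in zip(samples, scores) if abs(z) > threshold]


# Hypothetical telemetry with device and OS context attached.
samples = [
    {"device": "A", "os": "14", "latency_ms": 120},
    {"device": "A", "os": "14", "latency_ms": 130},
    {"device": "B", "os": "14", "latency_ms": 125},
    {"device": "B", "os": "13", "latency_ms": 118},
    {"device": "C", "os": "13", "latency_ms": 122},
    {"device": "C", "os": "14", "latency_ms": 128},
    {"device": "D", "os": "12", "latency_ms": 900},
]
```

Here `flag_anomalies(samples)` returns only the 900 ms sample from device D on OS 12. Because each flagged record carries its context, an alert can say which device and OS combination is affected instead of just reporting that latency rose.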

Prefer on‑device inference and collect only the minimum signals necessary for performance learning. Techniques like federated learning and differential privacy help aggregate understanding without exposing individuals. Be explicit about purposes, retention, and controls. Trust gained here directly increases willingness to share diagnostics that actually improve experiences.
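To make the differential privacy idea concrete, the sketch below adds Laplace noise to an aggregate count before it is reported. It assumes each user contributes at most one unit to the count (sensitivity 1) and that noise is added where the aggregate is computed; the function names and the epsilon value are illustrative, and a production system should rely on a vetted privacy library rather than this hand-rolled sampler.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def noisy_count(true_count, epsilon, rng=None, sensitivity=1.0):
    """Return a count with Laplace noise calibrated to sensitivity / epsilon.

    Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    return true_count + laplace_noise(sensitivity / epsilon, rng)
```

For example, a dashboard could report `noisy_count(slow_start_users, epsilon=1.0)` instead of the exact number of users who hit a slow cold start. Individual reports are blurred, while averages across many cohorts or days stay close to the truth.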

Offer clear toggles for performance analytics and explain benefits in plain language. Show users tangible wins—faster loads, fewer stalls—when they opt in. Provide a frictionless way to opt out anytime. Openness turns monitoring into a partnership rather than surveillance, and creates a healthier feedback loop for continuous improvement.

Let’s coauthor a lightweight ethical charter for AI‑driven performance work. What data is fair game, and what crosses the line? Add your principles and edge cases in the comments. We will synthesize them into guidance future teams can adopt, adapt, and proudly reference within their development handbooks.

Tooling and a Practical Roadmap

Evaluate TensorFlow Lite (renamed LiteRT in 2024), Core ML, or ONNX Runtime for on‑device models, and ML Kit for vision tasks. Keep models small, updateable, and explainable. Align choices with your team’s language, platform mix, and release cadence. Avoid toolchains that lock you in or slow iteration, especially during early experimentation phases.

Instrument performance events, define guardrails, and run A/B tests with sequential analysis or bandits. Monitor cold start, time‑to‑interactive, jank, and energy. Tie results to retention and session length so the wins are measurable. Celebrate small deltas; repeated, they compound into experiences users immediately feel and willingly recommend to friends.
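One classic form of sequential analysis is Wald's sequential probability ratio test (SPRT), sketched here for a yes/no metric such as "cold start finished under the target time". The baseline rate `p0`, the hoped-for rate `p1`, and the error rates are assumptions you would set per experiment; this is an illustration, not a full experimentation framework.

```python
import math


def sprt_decision(successes, trials, p0, p1, alpha=0.05, beta=0.2):
    """Wald's SPRT for a Bernoulli rate: H0 says rate = p0, H1 says rate = p1.

    alpha is the tolerated false-positive rate, beta the false-negative rate.
    Returns "accept_h1", "accept_h0", or "continue".
    """
    failures = trials - successes
    # Log-likelihood ratio of the observed data under H1 versus H0.
    llr = (successes * math.log(p1 / p0)
           + failures * math.log((1 - p1) / (1 - p0)))
    upper = math.log((1 - beta) / alpha)  # cross this: evidence favors H1
    lower = math.log(beta / (1 - alpha))  # cross this: evidence favors H0
    if llr >= upper:
        return "accept_h1"
    if llr <= lower:
        return "accept_h0"
    return "continue"
```

Calling this after each batch of sessions lets a test stop as soon as the evidence is strong in either direction, which shortens experiments compared with waiting for a fixed sample size. With `p0=0.10` and `p1=0.15`, 30 successes in 150 trials yields `"accept_h1"`, while 12 in 100 yields `"continue"`.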